Anthropic’s Agent SDK is essentially the Claude Code agent packaged as a library for TypeScript or Python. It makes it trivial to build agents for purposes other than coding, using the same agent harness. You define the tools, skills, and an appropriately sandboxed environment, and you can then prompt an agent to work towards a goal.


Thursday, 8th January 2026

Monday, 3rd November 2025

I've been getting reacquainted with Ruby lately. I had it lying around on my machine but couldn't quite remember how I managed versions and switched between them. It turned out I was using Homebrew, ruby-install, and chruby. I updated those tools to their latest versions, then installed the latest version of Ruby:

brew upgrade ruby-install chruby

ruby-install 3.4

This installed Ruby 3.4.6.

If configured (by sourcing chruby's auto.sh from your shell config), chruby automatically switches Ruby versions when you cd into a directory, using the version named in a .ruby-version file.
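Here's a simplified Python sketch of that lookup. It assumes, as chruby's auto.sh does, that the search starts in the current directory and walks up through parent directories until it finds a .ruby-version file. The function name is hypothetical, and the real script is written in shell, not Python.

```python
from pathlib import Path
from typing import Optional


def find_ruby_version(start: Path) -> Optional[str]:
    """Return the version named in the nearest .ruby-version file, searching
    `start` and then each parent directory up to the filesystem root.

    A simplified illustration of chruby's auto-switching lookup, not its code."""
    start = Path(start).resolve()
    for directory in (start, *start.parents):
        candidate = directory / ".ruby-version"
        if candidate.is_file():
            text = candidate.read_text()
            # Only the first line matters, e.g. "3.4" or "ruby-3.4.6".
            first_line = text.splitlines()[0] if text else ""
            return first_line.strip()
    # No .ruby-version file found anywhere above `start`.
    return None


if __name__ == "__main__":
    print(find_ruby_version(Path.cwd()))
```

A nested .ruby-version wins over one further up the tree, which is what lets a single project pin a different Ruby from the rest of your code.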

A newer alternative

I recently came across Mise, a "polyglot tool version manager". It aims to replace many version managers like nvm, ruby-install, and so on. I installed Mise (which involved editing my .zshrc file) and ran mise use -g ruby@3.4. It worked fine, and I installed Node with it too. Mise also manages environment variables and includes a task runner.

Mise looks promising. For me, the main benefit is improved shell start-up time. My machine is a 2015 MacBook, so it's a bit slow when loading multiple version managers. It took about 1 or 2 seconds to start a shell with nvm and chruby in the mix; with Mise the shell starts immediately.

I'll give it a go for a while.

Update

When I tried to install Ruby gems, I had mixed success.

The Problem: I was running Ruby 3.4.7 (via mise), but gem env showed gems were being installed to my old Ruby 3.2.0 directory. The gems existed, but their compiled C extensions were built for 3.2.0 and couldn't work with 3.4.7.

The Cause: Old GEM_HOME or GEM_PATH environment variables in my shell config pointed to the 3.2.0 installation and overrode the paths mise sets up.

The Fix:

# Remove the old GEM_HOME/GEM_PATH exports from ~/.zshrc, then clear them in the current shell

unset GEM_HOME

unset GEM_PATH

# Reload shell

source ~/.zshrc

# Reinstall gems
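To catch this kind of mismatch early, here's a small Python sketch. It relies on the fact that RubyGems directories are named after the Ruby ABI version (3.2.0 for every 3.2.x, 3.4.0 for every 3.4.x), and flags any GEM_HOME or GEM_PATH entry whose ABI directory doesn't match the Ruby you're running. The helper name and the example paths are hypothetical.

```python
import os
import re
from typing import List, Mapping

# Matches an ABI directory such as "/3.2.0" at a path-segment boundary.
ABI_DIR = re.compile(r"/(\d+\.\d+)\.0(?=/|$)")


def stale_gem_paths(env: Mapping[str, str], ruby_version: str) -> List[str]:
    """Return GEM_HOME/GEM_PATH entries built for a different Ruby ABI than
    `ruby_version` (e.g. "3.4.7"), without duplicates, in the order found."""
    wanted = ".".join(ruby_version.split(".")[:2])  # "3.4.7" -> "3.4"
    entries = []
    if env.get("GEM_HOME"):
        entries.append(env["GEM_HOME"])
    if env.get("GEM_PATH"):
        entries.extend(p for p in env["GEM_PATH"].split(os.pathsep) if p)

    stale = []
    for path in dict.fromkeys(entries):  # de-duplicate, preserving order
        match = ABI_DIR.search(path)
        if match and match.group(1) != wanted:
            stale.append(path)
    return stale


if __name__ == "__main__":
    # Compare against the Ruby you expect to be running (e.g. from `ruby -v`).
    print(stale_gem_paths(os.environ, "3.4.7"))
```

An empty list means no gem directory in your environment points at a different Ruby ABI.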

Tuesday, 21st October 2025

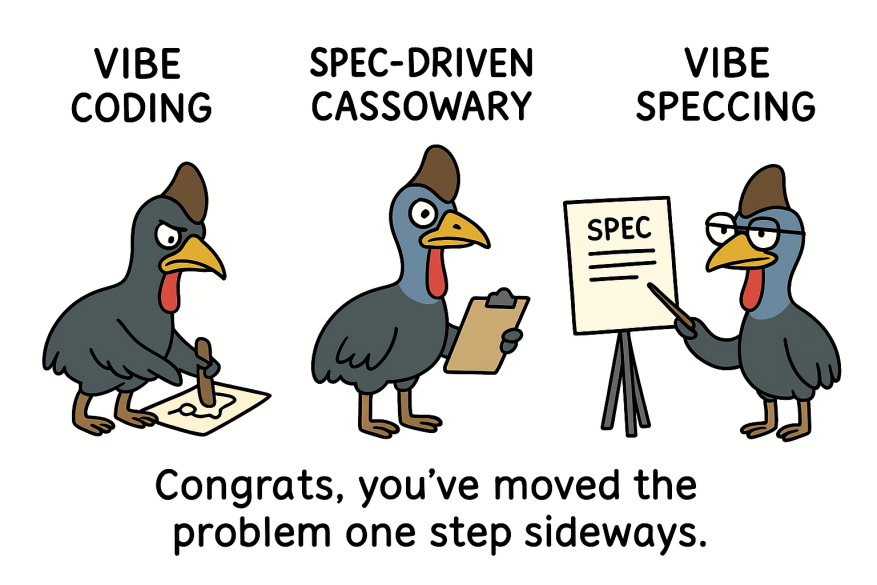

The past has shown that the best way for us to stay in control of what we’re building are small, iterative steps, so I’m very skeptical that lots of up-front spec design is a good idea, especially when it’s overly verbose. An effective SDD tool would have to cater to an iterative approach, but small work packages almost seem counter to the idea of SDD.

Wednesday, 15th October 2025

I'm pairing with Claude Code to build a progressive web app, but Service Workers weren't working. The feedback loop of testing in the browser and then reporting what I saw in Chrome DevTools back to Claude was painfully slow.

I wondered: is there a DevTools MCP?

There is! On 23 September 2025, the Chrome team blogged about Chrome DevTools (MCP) for your AI agent.

I added it to Claude Code with a single command:

claude mcp add chrome-devtools npx chrome-devtools-mcp@latest

After starting Claude Code, I tested it:

Please check the LCP of web.dev.

It worked!

Now for the real problem. I put Claude Code to work:

Research why my PWA service worker isn't registering in Chrome DevTools. Check the manifest, service worker file, and registration code. Create a plan to fix it, then implement.

After 5 to 10 minutes of debugging attempts, Claude Code concluded that we needed to migrate away from next-pwa to a modern successor called Serwist.

That's when it hit me: I hadn't noticed that Claude Code had selected next-pwa, a library whose last release was in December 2022. When working with teams of human software developers, one of my responsibilities is guiding them to choose dependencies carefully. "Popularity and adoption" is always a key factor.

It turns out Claude Code needs the same guidance.
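One way to give an agent (or a human) that guidance is a simple staleness check. The npm registry serves a JSON document per package at https://registry.npmjs.org/<package>, and its `time` map records a publish timestamp for every version, alongside `created` and `modified` keys. The sketch below uses hypothetical helper names and an arbitrary 12-month threshold to find the latest publish date and flag packages that haven't shipped a release recently. Release recency is only one signal; it doesn't replace judging popularity and adoption.

```python
import json
import urllib.parse
import urllib.request
from datetime import datetime, timezone
from typing import Optional


def fetch_registry_doc(package: str) -> dict:
    """Download a package's metadata document from the public npm registry."""
    name = urllib.parse.quote(package, safe="@")  # encodes the "/" in scoped names
    with urllib.request.urlopen(f"https://registry.npmjs.org/{name}") as resp:
        return json.load(resp)


def last_release(doc: dict) -> datetime:
    """Latest version publish time. Skips the 'created'/'modified' bookkeeping
    keys, since 'modified' can change without a new release."""
    return max(
        datetime.fromisoformat(ts.replace("Z", "+00:00"))
        for key, ts in doc["time"].items()
        if key not in ("created", "modified")
    )


def months_between(earlier: datetime, later: datetime) -> int:
    """Whole calendar months from `earlier` to `later`, ignoring days."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def is_stale(doc: dict, now: Optional[datetime] = None, max_months: int = 12) -> bool:
    now = now or datetime.now(timezone.utc)
    return months_between(last_release(doc), now) > max_months
```

Usage, with network access: `is_stale(fetch_registry_doc("next-pwa"))`. A check like this could run before an agent is allowed to add a dependency.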

8:55 AM #claude-code #software-engineering-management in posts

Tuesday, 14th October 2025

I recently discovered there are two different ways to connect Claude and GitHub, and honestly, the naming made them sound like they did the same thing. They don't. At all.

Let me explain what I learnt—and why one of them is perfect for my situation whilst the other would cost me quite a bit extra.

What's the Difference?

GitHub MCP Server lets my AI tools (Claude Desktop, VS Code, etc.) access GitHub. I ask Claude something, it queries GitHub through the MCP server, and gives me an answer. The flow is: me → Claude → GitHub.

Claude Code GitHub Actions works the opposite way. I mention @claude in a GitHub issue or PR, and GitHub spins up a cloud runner, executes Claude Code there, and pushes results back to my repo. The flow is: me → GitHub → Claude (running in the cloud).

The Cost Situation

Here's where it gets interesting for me as a Claude Pro subscriber.

When I use Claude Code on my laptop, it's included in my £18/month Pro subscription. Brilliant.

But Claude Code GitHub Actions requires:

- An Anthropic API key (pay-as-you-go, separate from Pro)

- GitHub Actions runner time

So even though I'm already paying for Claude Pro, I'd be adding two new line items to my monthly costs. That's not what I'm after as a solo developer keeping expenses tight.

Why GitHub MCP Server Works for Me

The GitHub MCP Server costs me nothing extra. It's free, open source, and works with my existing Claude Pro subscription.

Once set up, Claude Code can interact with GitHub directly from my laptop. I can ask it to check PR status, list open issues, review workflow runs, or analyse commits—all within the subscription I'm already paying for.

As a solo developer experimenting with AI-assisted development, this is exactly what I need. I'm working interactively with Claude anyway, so having it access GitHub whilst I'm present is perfect. I don't need autonomous agents running in the background whilst I'm asleep.

What I'm Missing (And Why That's Fine)

The GitHub Actions approach offers some genuinely powerful features:

- Background automation (Claude works on tasks without me present)

- Parallel agents (multiple Claude instances on different issues simultaneously)

- Autonomous responses (automatic reactions to @claude mentions)

These are impressive capabilities, but they solve problems I don't have yet. I'm experimenting, learning, and building. I don't need multiple AI agents working in parallel—I need one good assistant that can access my GitHub repositories when I ask it to.

When I Might Reconsider

If my situation changes, GitHub Actions could become worthwhile. Specifically:

- If I start managing multiple repositories that need regular automated maintenance

- If I build a team and want AI to handle initial PR reviews automatically

- If background processing becomes more valuable than the API + runner costs

- If I hit my Claude Pro usage limits regularly and need to scale up anyway

But right now? The MCP server gives me 90% of the value at 0% of the additional cost.

The Takeaway

For solo developers with Claude Pro who want to experiment with GitHub integration, the GitHub MCP Server is the obvious choice. It's cost-effective, powerful enough for interactive development work, and doesn't require managing API credits or monitoring runner costs.

Claude Code GitHub Actions is brilliant for what it does, but it solves a different problem—one that comes with a price tag that doesn't make sense for my current situation.

Sometimes the best tool is the one that does exactly what you need without costing you extra. For me, that's the GitHub MCP Server.

P.S. Here's a sequence diagram that makes this super clear.

Monday, 6th October 2025

Blogmark: Organisational design and Team Topologies after AI (via)

I'm a massive fan of the book Team Topologies and am looking forward to the 2nd edition. I listened to the authors talk on this podcast. Here are some bits that stood out to me:

Technology As Empowerment: Evaluate AI by whether it enables teams to achieve goals, not by novelty or adoption metrics.

Don't optimise locally without understanding the bigger picture: Assess downstream capacity and maintainability before accelerating code generation. Remember that teams are related to each other in a system or organisation.

Generative AI Powers Product Development: Generative AI can generate many options (text, images, product ideas, versions of a service) to test against synthetic users to quickly arrive at an A/B test.

Team Topologies As Infrastructure For Agency: Team Topologies, when originally written, was about trying to provide agency, empowerment, and autonomy to teams of humans within an organisation. The principles and patterns behind Team Topologies apply to agents too: respect cognitive limits (context), keep changes small and safe, and align work to a business domain. Perhaps even the three team interaction modes could be a way of thinking about agent-to-agent interaction via MCP?

Prepare Foundations Before Scaling AI: The capabilities that predict high-performing software delivery (https://dora.dev/capabilities/), plus work organised around business domains (think DDD). Decoupled, well-defined boundaries and solid engineering practices will position organisations to take advantage of AI agents working in parallel. Without these foundations, delivery of value will tend to suffer, as is already true for human-only teams.

Train Teams To Be Risk Assessors: People should know when to worry about the risks that AI presents. When building prototypes the risk is lower, whereas building for production carries much higher risk, so everyone should be asking what could go wrong, whether in code an agent has written or in an AI tool that has been deployed. Teams should already be using techniques like threat modelling, so they should keep applying those practices.

Tuesday, 30th September 2025

Blogmark: Acceptance Tests for AI-assisted development (via)

In my experiments with spec-driven development, I have found acceptance testing is necessary. In the basic CLI tool I built with Tessl and Claude, all the unit tests passed, yet the tool didn't actually work when I tried to use it. An acceptance test would have been very useful.

Matteo Vaccari writes:

Now there is considerable push for “spec-driven” development, where we put a lot of emphasis on co-generating specs with the AI. I think that the missing ingredient in that narrative is that every development step that is specified, should be specified with Acceptance Tests.

I agree. He finishes by reminding us that AI benefits from explicit standards, just like the best engineering teams, otherwise agents (and people) create a mess:

ATs, like TDD and good software design, have always been important tools in the hands of the best developers. Now that everyone is learning how to get value out of AI-assisted development, they are becoming way more important: even though some of us may have seen those as “nice-to-have for when we will have time”, if we’re going to do work with AI, these are undeniably necessary. AI multiplies whatever we bring to the table: if we bring sloppy practices (no tests or inconsistent tests, no ATs, no attention to software design), AI is going to make our work a disaster. If, on the other hand, we bring good habits and good practices, AI can help us write better software much faster.
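Applied to the CLI tool mentioned above, an acceptance test can be as simple as running the installed command the way a user would, with no mocks. This is a hypothetical sketch: it assumes the tool is on the PATH as llm-prompt and that the API key is available as GOOGLE_API_KEY (adjust both to your setup). Because it makes a real API call, it skips itself when either is missing.

```python
import os
import shutil
import subprocess
import unittest


class LlmPromptAcceptanceTest(unittest.TestCase):
    """Hypothetical acceptance test: exercises the installed CLI end to end,
    with no mocks, exactly as a user would run it."""

    @unittest.skipUnless(shutil.which("llm-prompt"), "llm-prompt is not installed")
    @unittest.skipUnless(os.environ.get("GOOGLE_API_KEY"), "no API key configured")
    def test_prompt_returns_an_answer(self):
        result = subprocess.run(
            ["llm-prompt", "What are the benefits of renewable energy?"],
            capture_output=True,
            text=True,
            timeout=120,
        )
        # The tool should exit cleanly and print a non-empty answer.
        self.assertEqual(result.returncode, 0, msg=result.stderr)
        self.assertTrue(result.stdout.strip())
```

Run it with `python -m unittest`. A test like this would have failed on the hard-coded model name even while every unit test passed.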

Monday, 29th September 2025

After sharing my experience with Spec-Driven Development and Claude Code, I received some valuable feedback that highlighted a crucial blind spot in my approach. It turns out the problem wasn't with my original specifications; it was that I hadn't properly reviewed the specs that Claude Code and Tessl had generated.

The Real Issue

The feedback pointed out something I'd missed entirely: whilst I'd moved away from "vibe coding" (throwing vague prompts at AI and hoping for the best), I'd fallen into a different trap—trusting generated specifications without proper review.

The core insight: making the spec the most important artifact in the workflow means you actually have to read and validate what gets generated, not just assume it's correct.

What I Actually Did Wrong

Looking back, my original README and requirements were fine. The problem was that I didn't thoroughly read the specifications that Claude Code and Tessl created from them.

The first red flag should have been this capability specification:

### Send prompt to Gemini LLM

Sends the provided prompt to the specified Gemini model using LangChain and returns the response.

- Mocked Gemini returns `"mocked response"`: prints `"mocked response"` and completes normally [@test](../tests/test_send_prompt_success.py)

I should have spotted immediately that this was describing a meaningless test. After I reprimanded Claude about the usefulness of this test, it generated a much better capability specification:

### Send prompt to Gemini LLM

Sends the provided prompt to the specified Gemini model using LangChain and returns the response.

- Creates ChatGoogleGenerativeAI instance with correct model and API key, returns response content [@test](../tests/test_send_prompt_integration.py)

- Handles LangChain API exceptions by re-raising them [@test](../tests/test_send_prompt_langchain_error.py)

- Processes empty prompt through LangChain correctly [@test](../tests/test_send_prompt_empty_input.py)

The second issue I missed was even more concerning. The specification included this capability:

### Load model configuration

Loads the model name from environment variables with fallback to default.

- Returns "gemini-1.5-pro" when `DEFAULT_MODEL` is not set in environment [@test](../tests/test_load_model_default.py)

- Returns custom model when `DEFAULT_MODEL` is set in environment [@test](../tests/test_load_model_custom.py)

If you look at the repository, you'll see that test_load_model_custom.py, although referenced in the spec file, was never actually created. I trusted that Claude Code would honour each capability and test, but clearly that's not something I can take for granted.

Claude Code did eventually realise it was missing this test when I later prompted about useless tests, but it didn't admit to having missed it initially.
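For illustration, here's roughly what the missing test could look like alongside the default case. This is a sketch under assumptions: load_model() here is a hypothetical stand-in that reads DEFAULT_MODEL from os.environ, as the spec describes, and the custom model name is a placeholder.

```python
import os
from unittest.mock import patch

FALLBACK_MODEL = "gemini-1.5-pro"  # default named in the spec


def load_model() -> str:
    """Hypothetical stand-in: model name from DEFAULT_MODEL, else the fallback."""
    return os.environ.get("DEFAULT_MODEL", FALLBACK_MODEL)


def test_load_model_default():
    # clear=True empties os.environ for the duration, so DEFAULT_MODEL is unset.
    with patch.dict(os.environ, {}, clear=True):
        assert load_model() == FALLBACK_MODEL


def test_load_model_custom():
    # The test that was promised in the spec but never created.
    with patch.dict(os.environ, {"DEFAULT_MODEL": "my-custom-model"}):
        assert load_model() == "my-custom-model"
```

patch.dict restores os.environ when each block exits, so the two tests can't leak configuration into each other.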

The Mental Adjustment

The feedback highlighted something I'd underestimated: trusting generated specifications without proper validation is just as dangerous as trusting generated code without review.

The workflow isn't just about having AI generate specs—it's about becoming a more careful reviewer of those specs. AI tools can write specifications that look professional but contain fundamental flaws or omissions.

A Better Approach

Rather than treating generated specs as authoritative, they need the same scrutiny as generated code:

Validate test descriptions: Check that each test specification actually describes meaningful verification, not just mock verification.

Cross-reference outputs: Ensure that promised test files actually exist and implement what the spec claims.

Question circular logic: If a test specification sounds like it's only testing that mocking works, it probably is.
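The cross-referencing step is easy to automate. Here's a minimal sketch, assuming spec files link their tests using the `[@test](relative/path)` syntax shown above, with paths relative to the spec file. The function name is hypothetical and not part of Tessl.

```python
import re
import sys
from pathlib import Path
from typing import List

# Matches test links such as [@test](../tests/test_load_model_custom.py)
TEST_LINK = re.compile(r"\[@test\]\(([^)\s]+)\)")


def missing_test_files(spec_path) -> List[str]:
    """Return every [@test](...) target in a spec that doesn't exist on disk."""
    spec_path = Path(spec_path)
    missing = []
    for target in TEST_LINK.findall(spec_path.read_text()):
        # Links are relative to the directory containing the spec file.
        if not (spec_path.parent / target).is_file():
            missing.append(target)
    return missing


if __name__ == "__main__":
    # Usage: python check_specs.py specs/*.spec.md
    for spec in sys.argv[1:]:
        for target in missing_test_files(spec):
            print(f"{spec}: missing {target}")
```

This only proves a file exists, not that it tests what the spec claims, so it complements rather than replaces reading the tests.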

The Real Learning

The original issue wasn't about my requirements being too vague—it was about not being sceptical enough of generated artifacts. AI-assisted development requires active verification at every step, including (especially) the specifications that drive everything else.

This workflow works, but only if you read what it generates as carefully as you'd review a junior developer's work. That includes the specs, not just the code.

Thanks to the AI Native Dev Discord community for the feedback that prompted this reflection.

10:34 AM #tessl #claude-code #spec-driven-development in posts

Friday, 26th September 2025

I built a simple CLI tool in one evening without writing a single line of code—not because the world needs another command-line utility, but as a learning exercise to explore LangChain whilst getting hands-on experience with AI-assisted development workflows.

The tool itself is deliberately basic: it sends prompts to Google's Gemini API via LangChain. No one will actually use it. But the development process revealed crucial insights about when AI excels and when human oversight becomes non-negotiable.

Context: I'm currently working through the IBM RAG and Agentic AI Professional Certificate course, which sparked my interest in putting LangChain into practice. This seemed like a reasonable opportunity to combine that learning with experimenting with Tessl and Claude Code workflows.

Time investment: 2 hours total versus an estimated 6-8 hours for hand-coding the equivalent functionality and test coverage.

The Setup: Spec-Driven Development with AI

I've been using Claude Code paired with a tool called Tessl, which enforces spec-driven development workflows. My approach was deliberately hands-off: write a README setting the vision, capabilities, tech stack choices, and API design, then let Claude Code handle everything else.

The project: a simple CLI tool that uses LangChain to send prompts to Google's Gemini API. I wrote a README, asked Claude Code to generate specs for the first basic feature, then requested implementation. Tessl keeps Claude Code focused by preventing feature creep; when the initial spec tried to do too much, I simplified it before proceeding.

💡 Worth Considering: Spec-driven development with AI requires upfront architectural thinking but prevents the common trap of letting AI wander into over-complex solutions. Tools like Tessl provide valuable guardrails.

First Reality Check: When AI Debugging Falls Short

After Claude Code reported all tests passing, I tried the tool: llm-prompt "What are the benefits of renewable energy?"

It didn't work.

Claude Code couldn't figure out why. I looked at the code and immediately spotted the issue: Claude had hard-coded the model name to a non-existent value instead of reading it from the .env file. This was particularly frustrating because my original README, which formed the basis of the entire spec, explicitly described the .env file and its purpose. Claude Code had somehow ignored this clear configuration guidance and hard-coded the value anyway.

Once I pointed this out, it fixed the problem instantly.

⚠️ Something I Noticed: AI excels at following patterns but struggles with environment-specific configuration issues. It's worth checking that AI implementations actually use your configuration files rather than hard-coded values, even when you've explicitly documented the configuration requirements.

You can see the code here: https://github.com/rowlando/cli-gemini-prompt/tree/af8bbb6be61ddb0191f5dcd23db9df7a967cd840

The Shocking Discovery: Meaningless Test Syndrome

The next morning, I examined the generated tests and found this gem in test_send_prompt_success.py:

from unittest.mock import patch

import llm_prompt

def test_send_prompt_success():
    # Mock send_prompt_to_gemini to return the expected response
    with patch.object(llm_prompt, 'send_prompt_to_gemini', return_value="mocked response") as mock_send:
        result = llm_prompt.send_prompt_to_gemini("Hello world", "fake_key", "gemini-1.5-pro")
        assert result == "mocked response"
        mock_send.assert_called_once_with("Hello world", "fake_key", "gemini-1.5-pro")

This test only verifies that mocking works—it doesn't test the actual function logic at all.

🚨 Worth Noting: AI commonly generates circular mock tests that prove nothing about real functionality. It's worth checking that AI-generated tests mock dependencies (rather than the function under test) and actually verify business logic.
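To make the contrast concrete, here's a sketch of that test rewritten to mock the dependency instead. To keep it self-contained, a hypothetical stub stands in for LangChain's ChatGoogleGenerativeAI (the real class accepts `model` and `google_api_key`, and `invoke()` returns a message with a `.content` attribute). In the real project you would patch the name where llm_prompt looks it up, e.g. `patch("llm_prompt.ChatGoogleGenerativeAI")`, assuming the module imports the class directly.

```python
from types import SimpleNamespace
from unittest.mock import MagicMock, patch


class _UnavailableClient:
    """Hypothetical stand-in for LangChain's ChatGoogleGenerativeAI so the
    sketch runs without the package or network access."""

    def __init__(self, **kwargs):
        raise RuntimeError("the real client would call the Gemini API")


# Stands in for the module namespace where the function looks the client up.
genai = SimpleNamespace(ChatGoogleGenerativeAI=_UnavailableClient)


def send_prompt_to_gemini(prompt, api_key, model):
    # The function under test: build the client, send the prompt, return the text.
    llm = genai.ChatGoogleGenerativeAI(model=model, google_api_key=api_key)
    return llm.invoke(prompt).content


def test_send_prompt_success():
    fake_llm = MagicMock()
    fake_llm.invoke.return_value.content = "mocked response"
    # Patch the dependency (the client class), not the function under test.
    with patch.object(genai, "ChatGoogleGenerativeAI", return_value=fake_llm) as client_cls:
        result = send_prompt_to_gemini("Hello world", "fake_key", "gemini-1.5-pro")
    # The real function body ran, so these assertions check its logic.
    client_cls.assert_called_once_with(model="gemini-1.5-pro", google_api_key="fake_key")
    fake_llm.invoke.assert_called_once_with("Hello world")
    assert result == "mocked response"
```

If send_prompt_to_gemini passed the wrong model, dropped the API key, or forgot to unwrap `.content`, this version would fail; the original would still pass.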

Teaching AI to Write Meaningful Tests

I challenged Claude directly: "Review the usefulness of all tests. test_send_prompt_success.py only checks the mock works—it doesn't test the real function."

Claude's analysis was surprisingly thorough:

Tests with Limited Value:

- test_send_prompt_success.py - Circular mock test proving nothing

- test_llm_prompt_main_success.py - Everything mocked, no integration testing

- test_llm_prompt_api_failure.py - Tests mock exceptions, not real failures

Missing Coverage:

- Real API integration tests

- load_model() function completely untested

- LangChain integration verification

- Response parsing logic

- Edge cases: empty prompts, network timeouts

I asked Claude Code to implement better testing practices, and it generated genuinely valuable improvements:

- Proper integration tests that mock at the HTTP level while testing business logic

- Edge case coverage for empty inputs and error conditions

- Real dependency testing that verifies LangChain integration parameters

- Comprehensive error handling for network failures and API issues

See the improvements: https://github.com/rowlando/cli-gemini-prompt/commit/97ab2768f8114c3d15b3876b606eb8de9aa64837

✅ Useful Approach: After generating tests, asking AI to review them for circular mocking and missing coverage can lead to self-correction when prompted correctly.

Is 17 Tests for 61 Lines Overkill?

My final challenge: "17 tests for 61 lines of code—is this overkill?"

Claude's response was data-driven:

Test-to-code ratio of 1:3.6 is appropriate for CLI tools because:

- External API calls require extensive failure scenario testing

- Environment variable configuration creates multiple code paths

- Argument validation needs comprehensive edge case coverage

- Error propagation from API to main function requires verification

Coverage breakdown:

- Argument validation: 4 tests

- Environment configuration: 4 tests

- API integration: 5 tests

- Error handling: 4 tests
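As a quick check, Claude's figures are consistent with each other:

$$
\frac{61\ \text{lines}}{17\ \text{tests}} \approx 3.59 \;\Rightarrow\; 1{:}3.6, \qquad 4 + 4 + 5 + 4 = 17\ \text{tests}
$$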

💡 Interesting Point: Don't judge test coverage by lines of code alone. CLI tools with external dependencies legitimately need extensive testing if you want them to be reliable.

What I Learned

This experiment taught me several things about AI-assisted development:

Constraints are essential: Spec-driven development prevented Claude from wandering off into feature creep. Whether that's Tessl, SpecKit, or just well-written specifications, some form of constraint seems necessary to keep AI focused.

Quality review is essential: The AI happily generated tests that only verified mocks worked properly. It took explicit prompting to get it to self-critique and generate meaningful tests. The concerning bit is how these meaningless tests still passed and gave a false sense of confidence. If I were to develop this further, I'd definitely add acceptance tests to verify actual end-to-end functionality.

Configuration debugging remains human work: The hardcoded model issue was obvious to me but completely invisible to Claude Code. Despite the README that formed the basis of the spec explicitly describing the .env file, Claude Code still hardcoded the value in the application code. AI appears excellent at following patterns but struggles with environment-specific nuances, even when given clear documentation about configuration requirements.

Testing coverage can be genuinely useful: Once corrected, the AI produced 17 tests that covered edge cases I probably wouldn't have bothered with manually. The 1:3.6 test-to-code ratio initially seemed excessive, but Claude's analysis of why CLI tools need extensive testing was quite convincing.

The process saved considerable time—about 70% compared to hand-coding everything. But it definitely wasn't hands-off development. More like having a very capable but literal-minded junior developer who needs clear instructions and careful review.
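The roughly 70% figure follows from the 2-hour actual time against the 6-8 hour estimate:

$$
1 - \frac{2\,\text{h}}{6\,\text{h}} \approx 67\%, \qquad 1 - \frac{2\,\text{h}}{8\,\text{h}} = 75\%
$$

so the saving lies between about two-thirds and three-quarters, depending on which end of the estimate you take.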

The Bottom Line

AI-assisted development can be quite effective—I managed to build a simple but fully functional CLI tool with comprehensive test coverage in 2 hours rather than the 6-8 hours it would have taken manually. Whilst the tool itself is just a learning exercise, the process required disciplined constraints (spec-driven development), careful quality review (catching circular mock tests), and recognising where human oversight remains irreplaceable (environment configuration debugging).

It's an interesting glimpse into a workflow where developers might spend more time on architecture and quality review whilst AI handles the mechanical aspects of implementation and testing. Whether that's actually better remains to be seen, but it's certainly faster.

Tools mentioned: Claude Code (command-line AI coding assistant), Tessl (spec-driven development), LangChain (AI framework), Google Gemini API

11:03 AM #claude-code #tessl #spec-driven-development in posts

Tuesday, 9th September 2025

I came across a research paper called Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence.

To make the research paper a bit more digestible, I turned to one of my favourite tools, Google's NotebookLM. It created a mind map, a podcast (you can hear the first minute above) and a video overview (a male voice talking over slides) for me.

I can't rate this tool highly enough for learning about or exploring a topic. See Tiago Forte's video for more info.

---

Incidentally, I asked Claude how to use ffmpeg to cut the file to 1 minute and fade it out at the end. Then I needed somewhere to store the audio file, and I chose Git LFS. I asked Claude Code to help, and it:

- updated the template with an HTML5 audio player with native browser controls

- created a directory to store the audio files within my site's folder structure

- added an entry to .gitattributes so that .m4a files are tracked by Git LFS for efficient storage

- updated the DecapCMS configuration so I could reference audio files from DecapCMS
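For reference, a trim-and-fade like this can be done with ffmpeg's `-t` option (limit the output duration) and its `afade` audio filter. Here's a small Python sketch that builds that command. The file names are placeholders, and the 5-second fade length is an assumption, since I haven't said how long the fade was.

```python
import shlex
from typing import List


def trim_and_fade_cmd(src: str, dst: str, keep_seconds: int = 60,
                      fade_seconds: int = 5) -> List[str]:
    """Build an ffmpeg command that keeps the first `keep_seconds` of `src`
    and fades the audio out over the last `fade_seconds` of that clip."""
    if not 0 < fade_seconds <= keep_seconds:
        raise ValueError("fade must be positive and no longer than the clip")
    fade_start = keep_seconds - fade_seconds
    return [
        "ffmpeg", "-i", src,
        "-t", str(keep_seconds),  # stop writing output after this many seconds
        "-af", f"afade=t=out:st={fade_start}:d={fade_seconds}",  # fade-out filter
        dst,
    ]


if __name__ == "__main__":
    cmd = trim_and_fade_cmd("podcast.m4a", "podcast-1min.m4a")
    print(shlex.join(cmd))
    # To actually run it (requires ffmpeg on the PATH):
    # subprocess.run(cmd, check=True)
```

With the defaults this prints `ffmpeg -i podcast.m4a -t 60 -af afade=t=out:st=55:d=5 podcast-1min.m4a`. Building the argument list rather than a shell string avoids quoting problems with file names containing spaces.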